Never take your children's online safety for granted

A couple of things happened this week which made me think again about online safety.

I think I have a fair handle on the subject, and a perspective which was well captured by Jim Gamble, the former head of the Child Exploitation and Online Protection Command (CEOP) Centre, in a 2019 TEDx talk:

"Technology in essence is neutral. If you take the room we are in today, it was fundamentally neutral when it was empty, but once you fill that room or that space, online or offline, with people, the risk is defined by the character of those individuals in the room." [a]

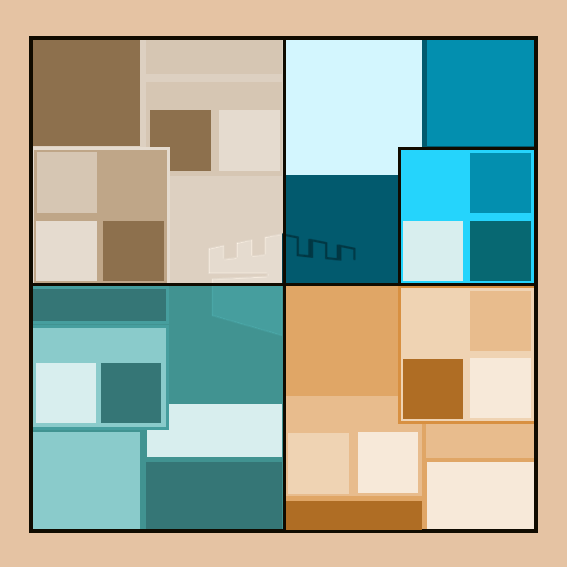

And he was right. When we think about any social space online, the risk to us as individuals comes from the access other people have to us, whether that's direct or indirect, and the opportunity they are given to post content which reaches us or to contact us directly. It's a risk to all of us, but children and adults at risk can be targeted deliberately by criminals with intent to abuse and exploit. Last month a BBC News investigation identified that "Children as young as nine have been added to malicious WhatsApp groups promoting self-harm, sexual violence and racism" [b] while it was reported globally that Instagram were taking steps to tackle the significant problem of sextortion on its platform [c]. And we also know that the algorithms which social media sites use to find content to engage us with can amplify the harmful content to vulnerable people.

The first was that our third child got her first phone recently. As a diligent parent I put parental controls on her phone, and we agreed together that one of the conditions of her having a phone is that we, as her parents, will regularly look at what she is using it for and talk about that with her. This week, I received a notification that an AI character app had been downloaded. We spoke about it and she had in fact already uninstalled it - no harm done - but I honestly had no idea how sophisticated this was and what children are being opened up to. This app encourages its users to engage with characters as they go on a journey of "emotional exploration and self-discovery".

WHAT!! If I hadn't had the controls on the phone, or a child who perceived that something was crossing a boundary, a character originally designed by any other user of the app, and then unleashed to grow and develop on the app's AI, might have taken her on that journey! The age rating of the app is 12, and officially users shouldn't be under 16, but there is no effective age verification in place once it has been downloaded. You can turn on a 'teen mode' to prevent access to sexual content, but this isn't well promoted and children are unlikely to choose to do that themselves. But the opportunity for harm isn't only sexual.

The second thing that happened was that I read about an item on BBC Breakfast (I didn't watch it and it's sadly not available on iPlayer) where they brought together a group of parents whose children have died, at least in part due to harmful online content, to discuss the recent announcements from Ofcom about the application of the new online safety rules [d]. Some incredibly sad stories and some very bold and courageous parents, who are concerned about the length of time it has taken to get to this point, and about the length of time it will take for the measures to become effective. Ofcom's timeline states that the protection of children codes will come into force in the second half of 2025 [e].

While Ofcom's information tells service providers that "the rules apply to services that are made available over the internet... a website, app or another type of platform", it concerns me that because of the pace of change in technology we will always be catching up with new companies and new platforms looking to exploit space for their business interests. And while the rules cover harmful content, the breadth of this definition is limited. Legally, there is nothing wrong with AI powered characters taking our children on journeys of "emotional exploration and self-discovery". That's not what I want for my kids... I wouldn't invite someone I don't know into my home to talk one-to-one with my kids about anything, and so I am not about to let them into my home via an online platform or app to help them discover who they are! But without knowledge of this risk, that might have been exactly what I did!

If you want to get some help with setting up controls, this is a helpful guide from Uswitch, and if you want to think about how to talk to children about their phone usage, this article from the NSPCC is a great place to start.

[a] Online safety - it is not about the internet... | Jim Gamble | TEDxStormontWomen

[b] Nine-year-olds added to malicious WhatsApp groups | BBC News

[c] Instagram to blur nudity in messages in bid to protect teens | The Guardian

[d] Tech firms told to hide 'toxic' content from children | BBC News

[e] Ofcom's information for service providers
